GPU

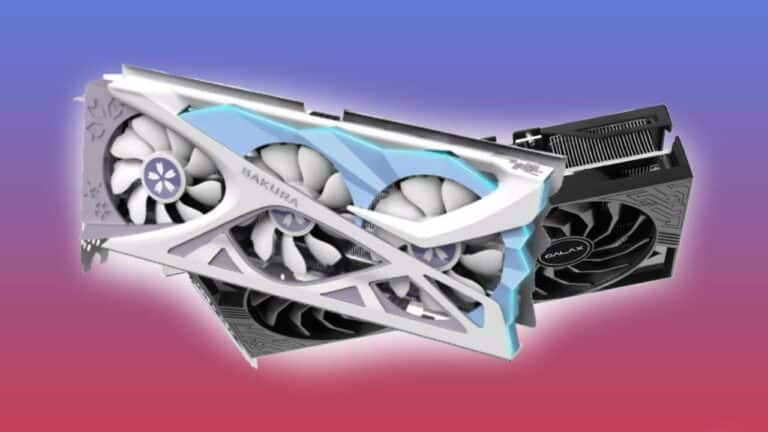

When you are building your own gaming PC, you want to experience the graphics the way they were made to be seen. The graphics card (or GPU) is the key to getting the best visuals out of your build. We’ve got a GPU for every build and budget, from high-end to low-cost, so you’ll be able to find the right fit for you.

Latest GPU Articles

Yeston releases new RTX 4060 Ti GPU which costs just as much as a 4070

Shopping around for a new GPU comes with many things to consider.

MSI Gaming X Trio RTX 3090 review – is the 3090 worth it?

Nvidia has had many GPUs over the years with the top-spec cards

Download more VRAM speed for the 7900 GRE thanks to AMD update

Downloading more RAM might be a joke, but not the ability to

RTX 40 Series supply is reportedly slowing down ready for the 50 series

As it’s been some time since the release of the Ada generation

Amazon’s Big Spring Deal finally addresses our one main problem with the RX 7700 XT

Sure, AMD’s lineup often carries a premium, but there’s a golden opportunity

Big Spring Sale drops entry-level Nvidia Ada GPU to lowest price again

Amazon’s Spring Deal Days are making waves, especially for those in the

Nvidia is once again changing its GPU SKUs

It’s not as big of a deal as other releases that may

Another Intel Arc driver dropped, yet another boost in performance

Intel has brought out another driver update to its Intel Arc range

AMD is upgrading RDNA 3, but not for its GPUs

AMD is not quite yet announcing anything about its next-generation graphics

Best GPU for Intel i7 – our top compatible graphics cards

If you’re looking for the best GPU for Intel i7 processors then

AMD’s flagship GPU now just above RX 7800 XT price thanks to Big Spring Sale

Amazon’s Big Spring Sale seems to have made some custom AIBs incredibly

Shocking Big Spring Sale sends RX 7900 XTX price under $700 so don’t miss out!

AMD’s flagship just got an insane Big Spring Sale deal on Amazon,

Best GPU for Dragon’s Dogma 2 – our top choices for the sequel

With plenty of years between the two games, hardware has changed so

AMD Radeon RX 6800 review – cheap 4K gaming

Considering what graphics cards have to offer today, it wouldn’t be terrible

AMD Radeon RX 6800 XT review: AMD’s comeback to competition

AMD’s had a tough time trying to gain some traction in the

Predator Intel Arc A770 review: the underdog that keeps improving

A newer contender to the competitive graphics card market, we get a

PNY GamingPro RTX 3070 review: a strong value GPU to this day

When it comes to current-day graphics cards it can be a hard

Even RTX 40 Super cards are falling under RRP now

A couple of German sites have noticed the trend in their retailer

Your 1660 could get a needed boost with this mod

If you’ve been thinking of upgrading you might not need to just

This is what current GPU pricing should have been

With the current-gen GPU pricing being high and not being too great,

Best GPU for i7-13700K – our top graphics cards for Intel i7 13th Gen

The middle of the range deserves great power as well, so you

Nvidia GTX is finally coming to an end

Although we might have had the RTX generation for a few years

Best GPU for Horizon Forbidden West – our top gaming cards

Looking for the best GPU for Horizon Forbidden West? Then we bring

ASUS RX 7000 GPUs get price cuts and cashback deals in Europe

Now could be the time to snatch up a new AMD graphics

Unsurprisingly this RTX 4060 Ti 8GB price also tumbles under MSRP

We just posted about how a 16GB model had fallen in price

Nvidia’s 4060 Ti 16GB has dropped to the same price as an 8GB model

Although a controversial choice of graphics cards, an Nvidia RTX 4060 Ti

Best GPU under 700 – top $700 graphics cards for gaming 2024

On a larger budget and some options to go from, you might

Best Nvidia GPU – our top GPU for gaming on PC

The best Nvidia GPUs are, in large part, the best graphics cards money can buy, a

Nvidia is improving its AI GPU delivery times

There have been reports that Nvidia’s AI GPUs are taking a lot

AMD RX 7900 GRE expands to the UK as well

If you’re looking for a new GPU towards the top of the

Best GPU for Nightingale – covering Nvidia & AMD graphics cards

If you are looking for the best GPU for Nightingale, you are

Should you wait for Nvidia’s 50 series GPUs?

Considering a new GPU is hard when there always seems to be

Gigabyte Gaming OC RX 7600 XT review – is the 7600 XT worth it?

RDNA 3 has expanded once again alongside Nvidia’s 40 series. With the

Best GPU for Skull and Bones – our top GeForce & Radeon picks

Ubisoft has finally launched its action-adventure game, Skull & Bones, and we

Gigabyte Windforce RTX 4080 Super review – is the 4080 Super worth it?

The last of the 40 series upgrades, the RTX 4080 Super review

Best graphics settings for Apex Legends – important settings to change

Apex Legends is a beloved battle royale game that requires high-quality graphics

ZOTAC Twin Edge Into the Spiderverse RTX 4060 Ti 8GB review

Nvidia hasn’t had the best naming schemes and has had a fair

ASUS TUF OC RTX 4070 Super review – is the 4070 Super worth it?

Nvidia has gone Super on its 40 series graphics cards, the lowest

Best GPU for Helldivers 2 – our GeForce & Radeon picks

Here are our recommendations for both Nvidia and AMD enthusiasts looking to play Helldivers 2

What is a normal CPU & GPU temperature while gaming? – How hot is too hot?

Wondering about the normal CPU & GPU temperature for gaming? You’ve come

RTX 3050 6GB vs RTX 2060 – which 6GB GPU is best?

These days, 8GB or higher is what many buyers look for in

RTX 3050 6GB vs RTX 4060 – a generation of difference, plus more

Now that Nvidia have introduced the RTX 3050 6GB to the market,

RTX 3050 8GB vs RTX 3050 6GB – does 2GB make a difference?

We’ve previously reviewed the RTX 3050 for the 8GB model, but how

RTX 3050 6GB vs GTX 1080 – does the new budget topple the old OG?

Looking for how the RTX 3050 6GB vs GTX 1080 compare, then

Nvidia’s RTX 4080 is still more expensive than the RTX 4080 Super

Despite being replaced by a newer model, Nvidia’s standard RTX 4080 is

The RTX 4070 Ti is plummeting in price now that Super is out

Looking for the best budget GPU right now? Well, the RTX 4070

You may want to reconsider picking up Nvidia’s budget RTX 3050 6GB model

Nvidia’s latest budget card, the RTX 3050 6GB is arriving this month.

Best GPU for Palworld – our top Nvidia and AMD graphics cards

Here are our recommendations for both Nvidia and AMD enthusiasts looking to play Palworld

Best GPU for Ryzen 7 5700X3D – the top graphics card for 5700X3D

Trying to pair up your new CPU with great graphics, we find

Score a custom $3,000 RTX 4080 Super PC build for free in this Nvidia-backed contest

The good folks over at r/buildapc on Reddit are hosting an RTX

RTX 4080 Super is selling out on Amazon, here’s where to buy one at MSRP

Nvidia’s RTX 4080 Super has just launched and they’ve been selling well

RTX 4080 Super reviews are finally here, this is what they have to say

We’re doing an RTX 4080 Super review roundup to give you a

Is the RTX 4080 Super launch overshadowed by 4090 Super rumors?

The RTX 4080 Super is the latest entry to Nvidia’s 40 series,

RTX 4080 Super release time & countdown – US, Canada, UK

*Update* the RTX 4080 Super release time has been and gone! Take

Nvidia’s RTX 4080 Super is already available to buy on Amazon – here’s how

Nvidia’s RTX 40 series Super refresh is just about wrapping up as

ASUS partner with Noctua again for 4080 Super, but we don’t really need it

With the RTX 4080 Super dropping today, news of a very special

Best RTX 4080 Super graphics card: the top 4080 Super GPU models

Looking for your next graphics card, then this selection of the best

Does Nvidia’s RTX 4080 Super launch spell the end for the poor RTX 4080?

The RTX 4080 Super has already been announced by Nvidia alongside the

RTX 4080 Super benchmarks will have to wait as review embargo delayed by Nvidia

Nvidia has announced that RTX 4080 Super reviews will suffer a minor

RTX 4080 Super vs 4090 – how close does it get?

As the Ada series gets an upgrade the gap between them falls,