Gaming Monitor

The humble gaming monitor is one of the most important purchases for any gaming setup, regardless of budget or usage. For that reason, we’ve spent countless hours reviewing some of the best monitors the marketplace has to offer. We offer comprehensive how-tos, informative best-of guides, and the latest news on everything monitor-related.

Gaming Monitor: How to Choose the Right One For You

Over the years, monitors have evolved not only in shape and size but also in their ability to meet gamers’ ever-growing demands for smoothness and immersion.

Nowadays, gamers have a whole host of exciting monitors to choose from, including monitors that are specifically tailored towards gamers (high refresh rate, low response time), stunning curved monitors, and even 4K gaming monitors – bringing the highest levels of gaming enjoyment to the table.

We constantly review and test the best gaming monitors, so no matter what you want from yours, we will have something that suits your exact needs and requirements!

144Hz Monitor

The 144Hz monitor is considered one of the best when it comes to gaming. A 144Hz monitor comes with a refresh rate of 144Hz; this is the number of times per second your display can physically refresh itself. Refresh rate is a very important factor in gaming because the higher the refresh rate, the smoother the gameplay will be. This not only adds to the level of immersion you get while playing but also gives you an advantage over your competition when used in tandem with variable refresh rate technology.

Despite there being much quicker monitors now available, the 144Hz monitor is considered one of the best in terms of price to performance. Furthermore, unless you’re playing at the highest level, you really won’t need much more than 144Hz anyway.
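To put refresh rates into perspective, it can help to convert them into the time each refresh stays on screen. The short Python sketch below does that arithmetic; the function name `frame_time_ms` is our own, chosen purely for illustration, and the figures are simple division rather than measurements of any particular monitor.

```python
def frame_time_ms(refresh_rate_hz: float) -> float:
    """Return how long a single refresh lasts, in milliseconds."""
    if refresh_rate_hz <= 0:
        raise ValueError("refresh rate must be positive")
    # One second is 1000 ms, split evenly across the refreshes in that second.
    return 1000.0 / refresh_rate_hz


if __name__ == "__main__":
    for hz in (60, 144, 240, 360):
        print(f"{hz}Hz -> {frame_time_ms(hz):.2f} ms per refresh")
```

Going from 60Hz to 144Hz trims roughly 9.7 ms off each refresh, while going from 144Hz to 240Hz trims only a further 2.8 ms or so, which is one reason each step up the refresh rate ladder tends to feel smaller than the last.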

Curved Monitor

A curved monitor is pretty much what it says on the tin; it’s a monitor that has been curved for aesthetic and immersive purposes. The curved monitor has been growing in popularity over the years thanks to cheaper models being released into the consumer marketplace.

There are many benefits to purchasing a curved monitor, the most interesting of which is how the curvature of the screen matches the shape of your eye to lessen the strain you experience.

While, historically, curved monitors haven’t been the best for gaming, that view has certainly changed over the last 5-10 years. The best curved gaming monitors offer up plenty of gamer-related specs, including fast refresh rates, low response times, and excellent colour accuracy.

Ultrawide Monitor

Ultrawide monitors are considered the perfect middle-ground for your gaming and production needs. While ultrawide monitors are tailored more towards productivity purposes, there are plenty of ultrawides that also offer up great gaming specifications.

That said, the main benefit of an ultrawide gaming monitor is the screen real-estate that it provides its users. Gone are the days of individuals needing dual monitors for their work/gaming needs. Nowadays, 34” screens offer high resolutions and decent aspect ratios, providing all the space you’ll ever need on one screen.

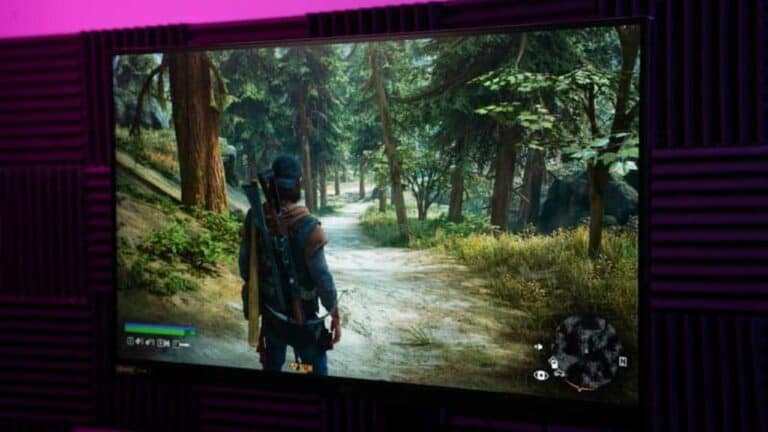

4K Gaming Monitor

4K gaming monitors have become much more affordable over the past decade, with users now having the ability to pick up a 4K gaming monitor for less than $300 – something you couldn’t say until recent times.

The best 4K gaming monitor is simply one that has the ability to display a native 4K resolution. Whilst this is great for picture clarity, it can take its toll on your PC if your build isn’t up to scratch. Be aware though, 4K gaming monitors are usually much more expensive than 1080p/1440p monitors.
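The toll on your PC largely comes down to how many pixels it has to draw for every frame. As a rough sketch, the Python snippet below compares pixel counts using the standard 16:9 resolutions (1920 x 1080, 2560 x 1440, and 3840 x 2160 for 4K UHD); the `RESOLUTIONS` table and `pixel_count` function are our own illustrative names, and real-world performance will not scale perfectly with pixel count.

```python
# Standard 16:9 resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Return the total number of pixels for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


if __name__ == "__main__":
    base = pixel_count("1080p")
    for name in RESOLUTIONS:
        pixels = pixel_count(name)
        print(f"{name}: {pixels:,} pixels ({pixels / base:.2f}x 1080p)")
```

A 4K panel has exactly four times as many pixels as a 1080p one and 2.25 times as many as a 1440p one, so your graphics card has considerably more work to do per frame.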

240Hz Monitor

If you really want the best of the best when it comes to gaming monitors, splashing out that little bit extra for a 240Hz monitor is always a good move. With their faster refresh rates, usually paired with other technologies like G-Sync or FreeSync, these monitors help take your gaming to the next level. The difference between 144Hz and 240Hz is less noticeable than the one between 60Hz and 144Hz, but if you want to grab yourself that competitive edge – this is the way to do it.

360Hz Monitor

With both Nvidia and AMD bringing out powerful new GPUs this year, gaming monitors have seen an upgrade too. We are just beginning to see the first of a new breed of monitors launch into the world with 360Hz monitors now being released from big brands like Asus, Acer, Alienware, and MSI. If you’ve upgraded to a new GPU and really want a beast of a PC setup – then these monitors could be the perfect fit for you.

Gaming Monitor Guides

Best Gaming Monitor Picks

Samsung Odyssey OLED G9 vs Acer Predator X49 X – which is best?

We’ve already discussed why we think that 2024 is the

Best monitor for Dragon’s Dogma 2 – our selection from 1080p to 4K

Many of you may have been waiting a long time

32″ Samsung Odyssey OLED G8 vs 34″ OLED G8 – 4K vs ultrawide

With a whole host of new OLED gaming monitors arriving

Best monitor for Nightingale – 4K, 1440p, ultrawide & budget picks

This open-world survival adventure looks packed full of beautiful visuals,

Best monitor for Skull and Bones – our top picks for best visuals

We’ve been looking forward to this jolly open world experience

Best monitor for Helldivers 2 – 4K, 1440p, and budget picks

With the game making waves on Steam early on, we

Samsung Odyssey OLED G9 (2024) vs OLED G9 (2023) – what’s new?

With Samsung announcing new additions to their Odyssey gaming monitor

32″ Samsung Odyssey OLED G8 vs Alienware AW3225QF

There has been a number of new OLED monitors announced

Samsung Odyssey OLED G6 vs Alienware AW2725DF – which is best?

2024 looks to be a great year for brand-new OLED

Best monitor for Palworld – 4K, 1440p & ultrawide gaming displays

Palworld is one of the biggest games around right now,

57″ Samsung Odyssey Neo G9 vs Acer Predator Z57 – a dual 4K monitor duel

Two titan monitors, but we find out which is best

Best monitor for RTX 4080 Super in 2024: 4K & 1440p monitors for gaming

Ever since Nvidia announced the RTX 4080 Super we’ve been

Best gaming monitor for RX 7600 XT in 2024 – 1080p, 1440p

AMD have released their latest XT variant graphics card, but

Best gaming monitor for RTX 4070 Ti Super in 2024 – our top picks

The GeForce 40-series has gotten a Super makeover, which leaves

Best gaming monitor for RTX 4070 Super in 2024 – 1440p & more

We’ve been looking forward to the RTX 4070 Super release

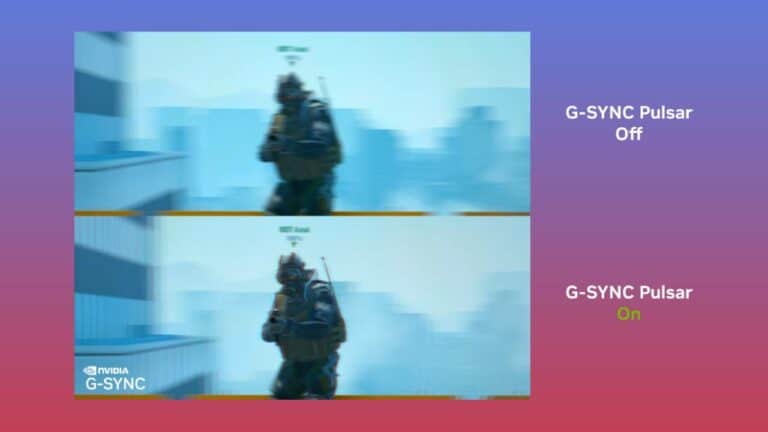

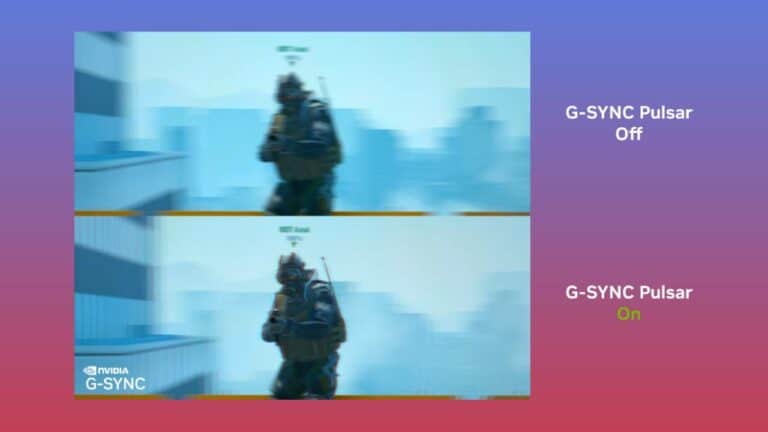

Nvidia G-Sync Pulsar explained – how does it work?

Among all of the impressive hardware releases taking place at

BenQ MOBIUZ EX480UZ monitor review: The best big-screen OLED?

It’s about time we took a closer look at the

Best HDMI 2.1 monitor – our top picks for gaming

If you are looking for the best HDMI 2.1 monitor

Best mini LED monitor in 2024 – our top picks

If you are looking for the best mini LED monitor

Best OLED gaming monitor 2024 (4K, 1440p, curved, 144Hz, 240Hz)

We’ve reviewed some of the market’s best OLED monitors for

Best gaming monitor 2024: our top 10 picks for gamers this April

Looking for the best gaming monitor in 2024? We’ve tested

Best curved monitor for office work in 2024 – best for productivity

Some of the best curved monitors out there are designed

Best Alienware monitor in 2024 (240Hz, ultrawide & more)

Alienware is a subsidiary brand from Dell that has been synonymous with PC

Best gaming monitor under $500 in 2024 – our top picks

On today’s agenda is figuring out the best gaming monitor

Best 5K monitor in 2024 – our top picks for gaming and creative work

If you are looking for the best 5K monitor for

Best gaming monitor under $400 in 2024 – our top picks

If you are looking for the best gaming monitors for

Best 165Hz monitor for gaming 2023 (4K, 1440p, 1080p)

Anyone looking to upgrade their gaming monitor should definitely consider

Best cheap HDMI 2.1 monitor in 2024 – budget picks for 4K 120Hz & more

Looking for the best cheap HDMI 2.1 monitor in 2024

Best 1440p 300Hz monitor 2024 (latest picks & news)

It’s about time we tracked down the best 1440p 300Hz

Best HDMI 2.1 monitor for PS5 in 2024 – 4K 120Hz monitor for PS5

If you’re interested in pushing the PS5 performance to its

Best monitor size for gaming 2024 – display sizes compared

What is the best monitor size for gaming? While it

Best portable OLED monitor 2023 – top small OLED displays

Picking up a small OLED monitor might be just what

Best OLED monitor for Mac – top picks for Mac and MacBook

Today we’re going to take a deep dive into the

Best 360Hz monitor in 2024 – our top picks for gaming

We scour the web to find the best 360Hz gaming

Best OLED monitor for Xbox Series X in 2024 (4K, 120Hz, HDMI 2.1)

We’ve put together this best OLED monitor for Xbox Series

Best OLED ultrawide monitor 2023 (34-inch, 49-inch, bendable)

It’s about time we took a look at the best

Best OLED monitor for productivity 2024 (4K, ultrawide, 49-inch)

When tracking down some of the best OLED monitors on

Best OLED monitor for PS5 in 2024 (4K, 120Hz, HDMI 2.1)

It’s pretty hard to beat some of the best OLED

Best gaming monitor for CS2 in 2024 – top displays for Counter-Strike 2

It’s time to introduce the best gaming monitor for CS2!

Best portable monitor in 2024 – our top picks

If you are in the market for the best portable

Best gaming monitor for Xbox Series S 2024 – top picks reviewed for April

Our expert team has been busy testing and reviewing some

Best gaming monitor for RTX 4080 in 2024 – 4K and 1440p picks

The RTX 4080 is no doubt an impressive graphics card,

Best thin bezel monitor in 2024 – our top borderless picks

If you are looking for the best thin bezel monitor

Best monitor for iRacing 2023 – top displays for sim racing reviewed

iRacing is more than just a racing game, it’s a

Best gaming monitor for RTX 4090 in 2024 (4K, 1440p, ultrawide)

Today we’re searching for the best gaming monitor for RTX

Best monitor for Call of Duty in 2024 (1080p, 1440p, 4K)

Call of Duty. Ah, those few words bring back memories

Best gaming monitor under $100 in 2024 – our top picks

If you are looking for the best gaming monitor for

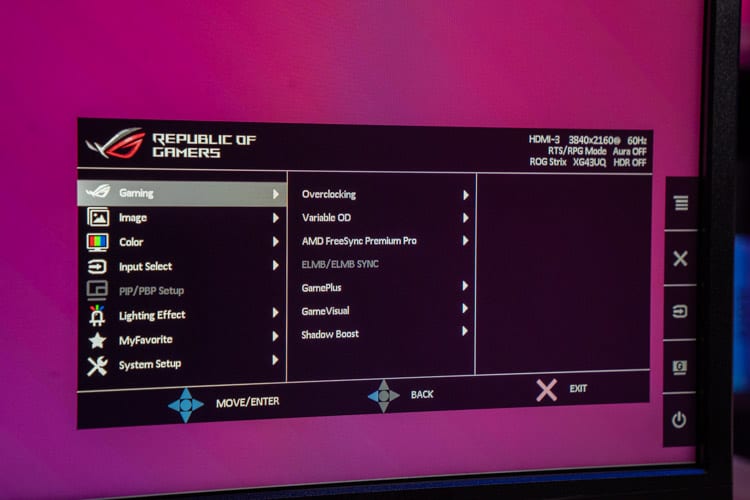

How to overclock your monitor – step by step guide for Nvidia & AMD users in 2024

You may already know you can improve your computer’s performance

Best gaming monitor under $300 in 2024 – our top picks

If you are in the market for the best gaming

Best gaming monitor for RTX 3060 in 2024 – 1440p & 1080p picks

Finding the best gaming monitor for RTX 3060 in 2024

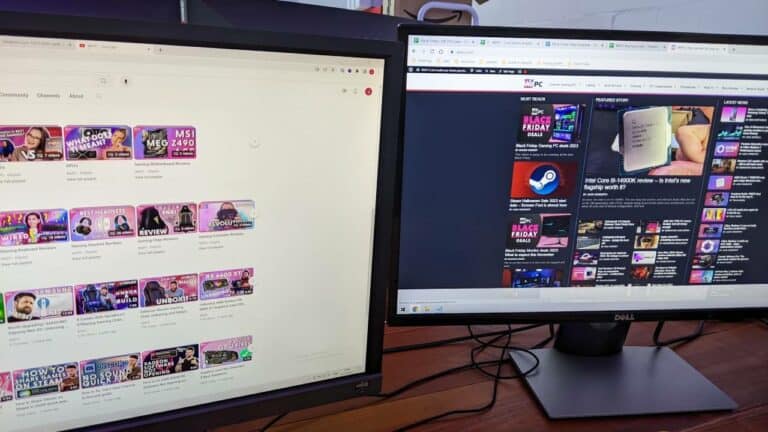

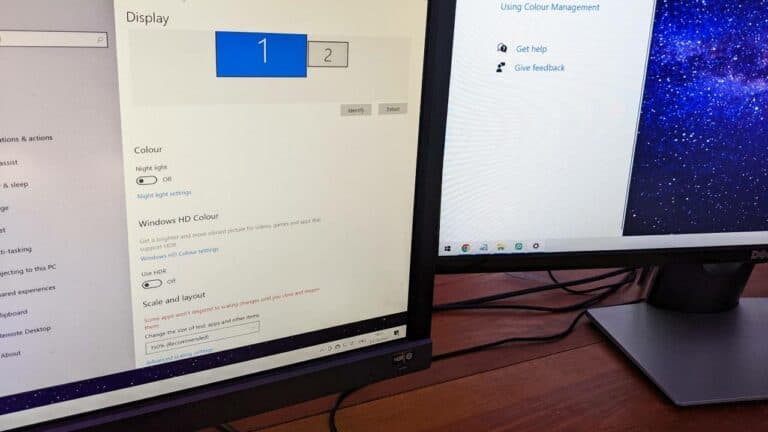

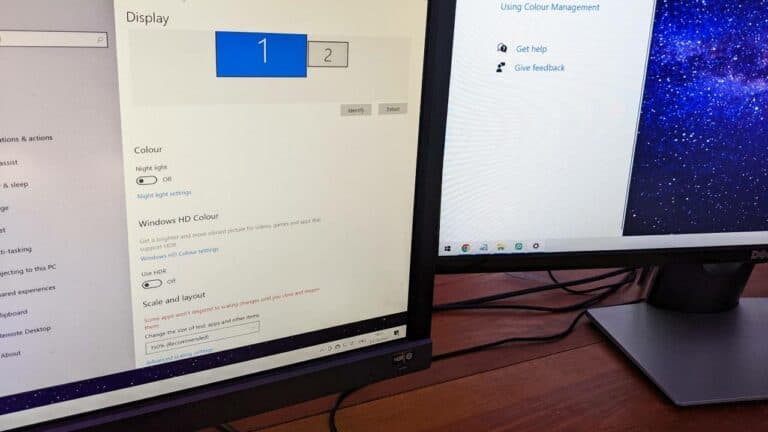

Best dual monitor setup in 2024 (gaming, workstation, curved)

Even though ultrawide monitors are becoming a popular choice, a

Best gaming monitor for RTX 4060 Ti & RTX 4060 in 2024

If you’re looking for the best gaming monitor for the

TV vs monitor – which one is better for gaming?

If you want a new display for your gaming setup,

What to look for when buying a gaming monitor in 2024

So you want to buy a gaming monitor but you

TN vs IPS vs VA – which is the best panel type for gaming?

When it comes to buying gaming monitors (or monitors, in

IPS vs LED monitors – what is the difference?

If you have been looking for a new monitor, then

Best 8K monitor in 2024 – our top available and upcoming picks

If you are looking for the best 8K monitor for

Best ultrawide gaming monitor 2024 (21:9, 32:9, 240Hz, budget) – April edition

We’ve been on the hunt for the best ultrawide gaming

Best FPS gaming monitor in 2024 – our top picks

When it comes to competitive first-person shooters, choosing the best

60Hz vs 144Hz vs 240Hz vs 360Hz – which refresh rate is better for gaming?

If you are looking for a new gaming monitor or

1080p vs 4K gaming monitors – which one is better?

It has been quite a while since 4K gaming monitors

Best gaming monitor for RTX 4070 Ti 2024 (4K, 1440p, ultrawide)

If you’re in the market for the best gaming monitor

Best gaming monitor for RTX 4070 in 2024 (1440p, 4K, ultrawide)

We’ve reviewed a ton of monitors in the past, and

Best 1440p 240Hz monitor 2024 (27-inch, OLED, ultrawide) – updated for April

Finding the best 1440p 240Hz monitor can be a quite tricky prospect

Best triple monitor setup in 2024 and how to set up 3 monitors

Despite gaming monitors now bigger and better than ever, there

Why is my monitor flickering – possible causes and how to fix

If your monitor is flickering and you’re looking for ways

Best cheap 144Hz gaming monitor 2024 – top picks, reviews & buyer’s guide

Looking for the best cheap 144Hz gaming monitor in 2024?

Best gaming monitor under $150 in 2024 – our top picks

If you’re on the hunt for the best gaming monitor under

Best PC Monitor 2023 (1080p, 1440p, 4K)

Monitors have come a long way since the days of

Best monitor for PS5 in 2024 (HDMI 2.1, 4K, 120Hz) – updated for April

What is the best monitor for PS5? Finding the best

Best 4K 144Hz Monitor 2023 – reviews and buyer’s guide

Back in the days when the smallest monitors were as

Best HDR monitor in 2024 – our top picks for gaming

If you are in the market for the best HDR

Best IPS monitor in 2024 – our top picks for gaming

If you are looking for the best IPS gaming monitor

Best 27-inch monitor in 2024 – our top 27″ picks for gaming

If you are looking for the best 27-inch monitor for

Best monitor for Mac mini in 2024 – top picks for Apple desktops

If you are looking for the best monitor for Mac

Best FreeSync Monitor 2023 (4K, console, 240Hz)

Looking for the best FreeSync monitor can be easier said

Best touch screen monitor in 2024 – our top picks

If you are looking for the best touch screen monitor,

Best 240Hz gaming monitor in 2024 – our top picks

Finding the best 240Hz gaming monitor is no easy task

Best 4K curved monitor – buyer’s guide & reviews

When it comes to obtaining maximum immersion from your games,

Best monitor for MacBook Pro: top picks for an Apple setup

Looking for an additional display? We pick out the best

Best 1440p monitor in 2024 – our top QHD picks

We’ve reviewed the market’s best 1440p monitors and have concluded

Best 24-inch monitors in 2024 – our top picks for gaming

The last ten or so years have seen some pretty

Best gaming monitor under $200 in 2024 – great value for gaming

Gaming monitors, for obvious reasons, are one of the most

Best 32-inch monitor in 2024 – our top picks for gaming

When checking out high-quality monitors, the best 32-inch monitor may

Is the Samsung Odyssey OLED G9 good for gaming?

A simple question which may be on your mind: is

Samsung OLED G9 vs ASUS PG49WCD – which OLED ultrawide will be best?

Two impressive ultrawide monitors arrive in 2024, so it’s time

Samsung Odyssey OLED G9 release date & price revealed

The Samsung Odyssey OLED G9 release date is here. This

Best curved gaming monitor 2024 – latest displays reviewed

We’ve tested a ton of curved gaming monitors here at

Best 4K gaming monitor in 2024 – our top UHD picks

If you are looking for the best 4K gaming monitor

Best 144Hz monitor in 2024 – our top picks for gaming

We take a look at the best 144Hz monitor on

Best monitor for Xbox Series X 2024 (4K, HDMI 2.1, 43-inch)

We’ve reviewed hundreds of the market’s best monitors for Xbox

How bright is the Samsung Odyssey OLED G9?

Samsung’s latest high-end monitor is finally released to the world,

Is the Samsung Odyssey OLED G9 worth it?

Samsung Odyssey OLED G9 is a luxurious and high-end gaming

ASUS Monitors & Displays – Versatility, Quality and Clarity

*SPONSORED POST* A good display is absolutely essential for PC

Corsair Xeneon 27QHD240 review: 27 inch OLED gaming just got better

Our resident gaming monitor specialist finally got his hands on

Best console gaming monitor in 2024 – latest displays reviewed in April

The best gaming monitors are different from your standard TVs

Best gaming monitor for Star Wars Jedi: Survivor

So you want to know what the best monitor

LCD vs LED monitor – which one is better for gaming?

While shopping for gaming monitors, you will often come across

Best G-Sync monitors in 2024: Latest displays reviewed

Our team of display experts have reviewed plenty of the

Hands-On Gaming Monitor Reviews

BenQ MOBIUZ EX480UZ monitor review: The best big-screen OLED?

It’s about time we took a closer look at the

Corsair Xeneon 27QHD240 review: 27 inch OLED gaming just got better

Our resident gaming monitor specialist finally got his hands on

Samsung Odyssey Ark Monitor Review

The Samsung Odyssey Ark gaming monitor is by far one

Gigabyte M32U 4K 144Hz gaming monitor review

The Gigabyte M32U is one of the brand’s latest 4K

AOC CQ32G1 Monitor Review | An Affordable 31.5″ Curved Gaming Monitor

As life starts to get back to normal, I finally

Ultimate Buyers Guides

Not sure where to start or what brand is right for you? Then read our Ultimate Buyers Guides, so you know what to look out for when buying.

Nvidia G-Sync Pulsar explained – how does it work?

Among all of the impressive hardware releases taking place at

How to overclock your monitor – step by step guide for Nvidia & AMD users in 2024

You may already know you can improve your computer’s performance

Why is my monitor flickering – possible causes and how to fix

If your monitor is flickering and you’re looking for ways

Is the Samsung Odyssey OLED G9 good for gaming?

A simple question which may be on your mind: is

How bright is the Samsung Odyssey OLED G9?

Samsung’s latest high-end monitor is finally released to the world,

Is the Samsung Odyssey OLED G9 worth it?

Samsung Odyssey OLED G9 is a luxurious and high-end gaming

Does the ROG SWIFT PG27AQDM have burn-in?

Ah, the dreaded burn-in question. It’s a pestilence that has

Does Acer Predator X45 have burn in?

Oh, burn-in! That’s a valid concern when it comes to

What monitor brightness is best for the eyes?

The brightness level of your screen affects not only the

Is the Samsung ViewFinity S9 worth it?

Determining whether the Samsung ViewFinity S9 is worth it can

Does the Samsung ViewFinity S9 have burn in?

You’re thinking of getting the Samsung ViewFinity S9, right? That’s

Are 8K monitors good for gaming? 2023

Wondering whether the latest 8K monitors are good for gaming?

Is the Samsung ViewFinity S9 8K?

Announced back during CES 2023 at the beginning of the

Is the Samsung Neo G95NC worth it?

Samsung Odyssey Neo G9, or G95NC, is a highly-anticipated piece

Is the Corsair Flex worth it?

The Corsair Xeneon Flex is a revolutionary gaming monitor that

Does the Samsung Neo G95NC have burn-in?

The question of burn-in on the Samsung Neo G95NC is

Does the Samsung OLED G9 have burn-in?

Samsung OLED G9 is an upcoming gaming monitor that has

8K monitor resolution, is it worth it?

When it comes to the world of monitors, there’s no

How to clean your monitor screen: Quick guide

We’re here to show you exactly how to clean your

What monitor is compatible with MacBook Air?

A monitor that is compatible with a MacBook Air is

What monitor is best for graphic design?

When choosing a monitor for graphic design, there are three

What monitor is compatible with MacBook Pro?

Many monitors are compatible with a MacBook Pro of any

Why monitors are better for gaming?

Monitors are generally considered to be better for gaming than

Why monitors are curved?

Curved monitors have become increasingly popular in recent years, and

Why monitors have USB ports?

Monitors sometimes come equipped with USB ports to provide users

Why monitors are more expensive than TVs?

There are a few reasons why monitors tend to be

How monitor screen size is measured?

Screen size is measured in inches and refers to the

How To Setup Dual Monitors

More and more people have multi-monitor setups, so knowing how

What monitor can I use with iMac?

Mac computers are all-in-one desktop computers that come with built-in

What monitors do pro gamers use? Pro players monitors explained

Professional gamers, also known as esports players, rely on high-quality

What monitor does Shroud use?

Michael Grzesiek, also known as Shroud, is a popular streamer

What is screen tearing & how to fix screen tear – a complete guide

Display technology can be complicated, so you may be wondering:

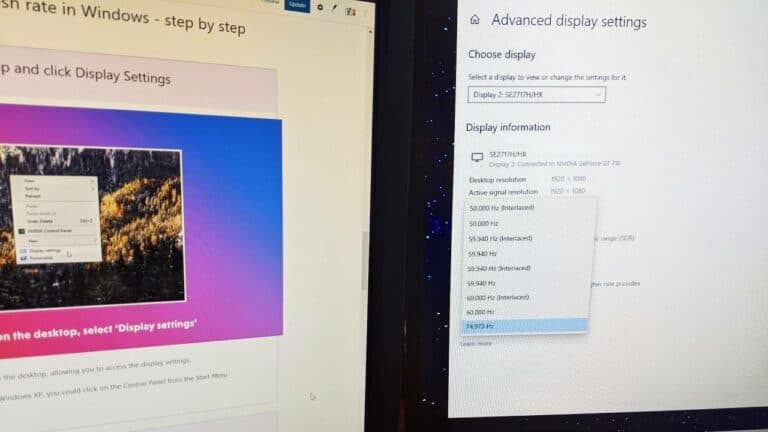

How to check & change monitor refresh rate in Windows in 2024

Your monitor’s refresh rate is a very important factor to

How to fix ‘second monitor not displaying’ issue

There comes a time in everyone’s life when they take

How to measure a computer screen: Quick guide

A computer screen is measured diagonally from one corner to

Is 1440p 4K? The big differences in viewing quality and performance

‘Is 1440p 4K?’ is a question we hear quite regularly

What is overdrive on a monitor? Everything you need to know

Overdrive is a feature that you’ll see on many modern

What is Nano IPS and is it worth it?

One of the big questions we’ve received over the last

What is aspect ratio and why does it matter? (4:3, 16:9, 21:9, 32:9)

Aspect ratios – I know what you’re thinking, boring. While

What Is FreeSync? Everything You Need To Know

What is FreeSync? Historically, monitors were plagued with the screen

How To Test Your Monitor For Backlight Bleed – Backlight Bleed Test

The last thing you want to see as you turn

The Evolution Of Gaming Monitors – Are We Going Backwards?

Over the past couple of decades, gaming monitors have seen

What Makes A Good Gaming Monitor?

Gaming over the last couple of decades has exploded in

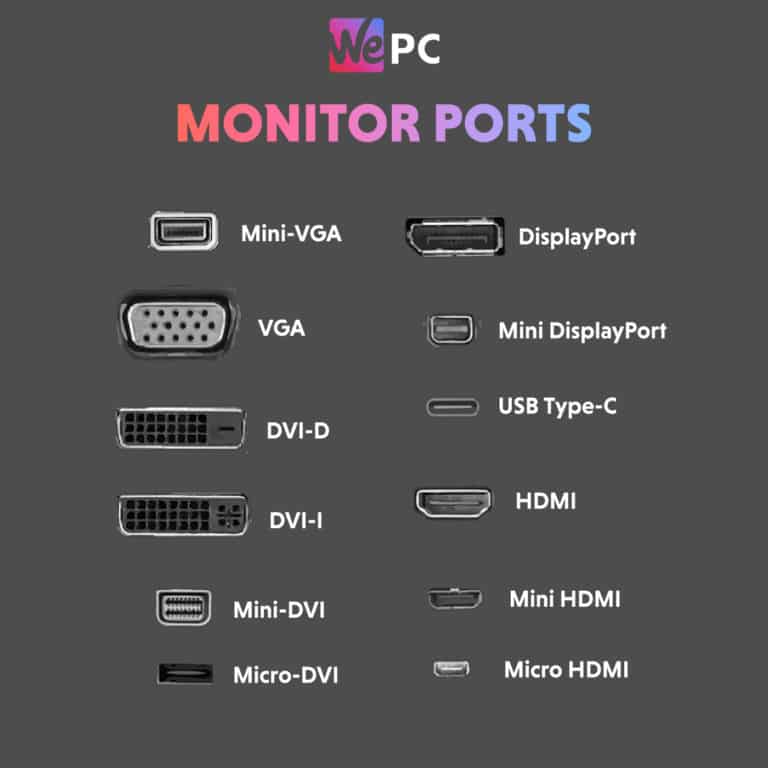

Which cable do I need for 144Hz gaming: Can HDMI do 144Hz?

For many gamers, getting a 144Hz signal to their gaming

Comparison

Read our comparisons of the latest and greatest gaming monitors including IPS, 4K, various refresh rates and ultrawides.

Samsung Odyssey OLED G9 vs Acer Predator X49 X – which is best?

We’ve already discussed why we think that 2024 is the

32″ Samsung Odyssey OLED G8 vs 34″ OLED G8 – 4K vs ultrawide

With a whole host of new OLED gaming monitors arriving

Samsung Odyssey OLED G9 (2024) vs OLED G9 (2023) – what’s new?

With Samsung announcing new additions to their Odyssey gaming monitor

32″ Samsung Odyssey OLED G8 vs Alienware AW3225QF

There has been a number of new OLED monitors announced

Samsung Odyssey OLED G6 vs Alienware AW2725DF – which is best?

2024 looks to be a great year for brand-new OLED

57″ Samsung Odyssey Neo G9 vs Acer Predator Z57 – a dual 4K monitor duel

Two titan monitors, but we find out which is best

TV vs monitor – which one is better for gaming?

If you want a new display for your gaming setup,

TN vs IPS vs VA – which is the best panel type for gaming?

When it comes to buying gaming monitors (or monitors, in

IPS vs LED monitors – what is the difference?

If you have been looking for a new monitor, then

60Hz vs 144Hz vs 240Hz vs 360Hz – which refresh rate is better for gaming?

If you are looking for a new gaming monitor or

1080p vs 4K gaming monitors – which one is better?

It has been quite a while since 4K gaming monitors

Samsung OLED G9 vs ASUS PG49WCD – which OLED ultrawide will be best?

Two impressive ultrawide monitors arrive in 2024, so it’s time

LCD vs LED monitor – which one is better for gaming?

While shopping for gaming monitors, you will often come across

ROG Swift PG27AQDM vs Acer Predator X45

Gaming monitors have come a long way since the days

Samsung G7 vs G9

Samsung, a brand that’s synonymous with top-of-the-line electronics, has long

ASUS PG27AQDM vs PG32UQX: Which display is best?

Many people don’t think of gaming monitors as exciting, but

ASUS PG27AQDM vs PG42UQ: which OLED monitor is best?

So, you’re in the market for a new monitor and

Acer Predator X45 vs Corsair Flex: Which gaming monitor is best?

We’ve wanted to do this one since the announcement of

Alienware AW3423DW vs Alienware AW3821DW

Alienware is well known for its exceptional gaming gear, and

ASUS PG48UQ vs LG C2: Which is the best monitor?

Today we’re delving deep into the world of monitors and

ASUS PG48UQ vs LG 48GQ900-B: Which 48 inch monitor is best?

We have a veritable clash of titans in this article

ASUS PG42UQ vs Alienware AW3423DW

Monitors, monitors, monitors. What’s the difference, you may ask? Well,

Alienware AW3423DW vs Alienware AW3420DW

Well, what better monitor to compare the already great Alienware

ASUS PG42UQ vs ASUS PG48UQ – Which should you buy?

ASUS is a leading light in monitor production and these

Alienware AW3423DW Vs Samsung Neo G9

Today, we are comparing the Alienware AW3423DW and the Samsung

ASUS PG42UQ vs LG C2 – which should you buy?

Gather round, for today we’re going to witness a battle

Hz vs FPS – what’s the difference?

You can draw many similarities when comparing FPS vs Hz.

G-sync vs G-sync compatible – which monitor is better for gaming?

If you are looking for a new gaming monitor and

DVI-I vs DVI-D – what’s the difference, and which one is better?

DVI-I and DVI-D connectors have been used with modern displays

HDR10 vs Dolby Vision – which HDR format is better?

As display technology has evolved, High Dynamic Range (HDR) has

DisplayPort Vs HDMI – which display interface is better?

When you think of a display interface cable, chances are

1080p vs 1080i – which one is better for gaming?

If you’re looking for a new gaming monitor and you’re

Curved vs flat monitor – which one is better?

If you want to settle the “curved monitor vs flat

VR Vs Flat High-Definition Screens

Alright, it’s Summer 2020, and just like every year the

FreeSync vs G-Sync – which monitor is better for gaming?

If you’re into gaming, then chances are that you’re familiar

144Hz vs 240Hz monitor – which one is better for gaming?

If you want to settle the “144Hz vs 240Hz” debate,

News

New and established brands are coming to market all the time. We are here to cover the latest news. Check back regularly for news on new products, launches, deals and brands.

Samsung Odyssey OLED G9 release date & price revealed

The Samsung Odyssey OLED G9 release date is here. This

Does the ROG SWIFT PG27AQDM have burn-in?

Ah, the dreaded burn-in question. It’s a pestilence that has